Prompt Chaining

Imagine that, instead of an LLM (a large language model), you have an assembly line that manufactures a complex product, like a car.

It would be crazy to ask a single super-worker to build the entire car from scratch. They would likely make mistakes, forget parts, or take forever.

Instead, you divide the work:

- One worker puts on the chassis.

- The next one installs the engine.

- The next one puts on the wheels.

- And so on...

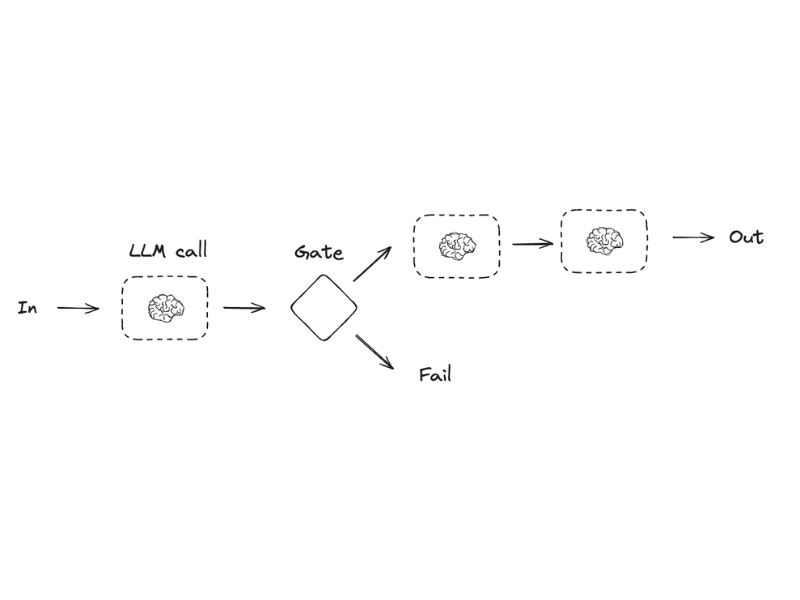

Prompt Chaining is exactly that. Instead of giving the LLM one giant, complex task in a single prompt, you divide the task into a chain of smaller, more manageable steps.

- Each LLM call is a "specialist worker" who does one thing well.

- The "output" of each worker becomes the "input" for the next: the worker who puts on the wheels needs a car that already has its engine.

- The "Gate": This is the quality inspector between stations. Before work moves on to the next phase, a simple program (not the AI) checks whether the previous step's output is acceptable. Is the engine properly adjusted? If not, production stops. This keeps errors from being carried forward.

In summary: you give up some speed (latency), because several calls run one after another, but you gain a lot of reliability and accuracy, because each step is much easier to execute correctly.
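The chain-and-gate pattern described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not any library's API: `llm` stands in for whatever model client you use (here, any function that takes a prompt string and returns a reply string), and `run_chain`, `GateError`, and the `{input}` placeholder in each prompt template are hypothetical names chosen for illustration. The fake `shout` model at the bottom just uppercases its prompt, so the example runs without an API key.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical interfaces, for illustration only:
# an LLM is any function from a prompt string to a reply string,
# and a gate is a plain Python check (not an AI) on one step's output.
LLM = Callable[[str], str]
Gate = Callable[[str], bool]
Step = Tuple[str, Optional[Gate]]  # (prompt template with an {input} slot, optional gate)


class GateError(Exception):
    """Raised when a gate rejects a step's output; the chain stops there."""


def run_chain(llm: LLM, steps: List[Step], first_input: str) -> str:
    """Run each step in order, feeding every output into the next prompt."""
    current = first_input
    for number, (template, gate) in enumerate(steps, start=1):
        # The previous step's output becomes this step's input.
        output = llm(template.format(input=current))
        # The inspector between stations: stop before a bad result moves on.
        if gate is not None and not gate(output):
            raise GateError(f"step {number} failed its gate: {output!r}")
        current = output
    return current


def shout(prompt: str) -> str:
    """Fake model for trying the chain offline: it just uppercases the prompt."""
    return prompt.upper()


steps: List[Step] = [
    ("draft: {input}", None),
    ("polish: {input}", lambda out: out.startswith("POLISH")),
]
print(run_chain(shout, steps, "car"))  # POLISH: DRAFT: CAR
```

Raising an exception is one way to model "production stops": nothing after a failed gate runs, so a bad intermediate result never reaches the next worker.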

Real-World Example: "Blog Article Assistant"

Imagine you want an AI to help you write a complete article on a topic.

❌ The Wrong Approach (A single giant prompt):

"Write a complete, well-structured, and SEO-optimized blog article about 'The benefits of meditation for reducing stress,' with an introduction, three main points with examples, and a conclusion."

This approach is risky: the AI might generate low-quality text, forget a section, or structure the article poorly.

✅ The Right Approach (Prompt Chaining): Here we divide the task into a chain of specialists.

Step 1: The Brainstormer (LLM Call #1)

- Input: "The topic is: The benefits of meditation for reducing stress"

- Prompt: "Generate the 3 main points or subtopics for an article on this topic. Respond only with a list."

- Output (Example):

1. Reduction of Cortisol and the Physiological Stress Response.
2. Improvement of Mental Clarity and Focus.
3. Increase in Long-Term Emotional Resilience.
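Step 1 amounts to one function that glues the input and the instruction into a single prompt and makes one model call. A minimal sketch, assuming a hypothetical `llm` interface (prompt string in, reply string out); `brainstorm` is an illustrative name, and the prompt wording is copied from the example above.

```python
from typing import Callable

LLM = Callable[[str], str]  # hypothetical: prompt in, reply text out


def brainstorm(llm: LLM, topic: str) -> str:
    """LLM call #1: combine the input (the topic) with the Step 1 instruction."""
    prompt = (
        f"The topic is: {topic}\n"
        "Generate the 3 main points or subtopics for an article on this topic. "
        "Respond only with a list."
    )
    # The raw reply is returned unchanged; checking it is the gate's job.
    return llm(prompt)
```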

(This is where our "Gate" would go)

- Program Logic: Is the result of Step 1 a list? Does it have exactly 3 points? If both answers are yes, we continue. If the AI responded with a long paragraph instead, we stop the process, because the next step expects a list and would fail.
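Because the gate is ordinary code rather than another model call, it can be a few lines of string checking. A minimal sketch, assuming the model writes one numbered item per line (`1. ...` or `1) ...`); `gate_three_points` and its regular expression are illustrative choices, not a standard check.

```python
import re
from typing import List

# Matches a numbered list line such as "1. Some point" or "2) Some point".
ITEM = re.compile(r"^\s*\d+[.)]\s+(.+?)\s*$")


def gate_three_points(output: str) -> List[str]:
    """Return the 3 points if the output is a 3-item numbered list; else raise."""
    items = []
    for line in output.splitlines():
        if not line.strip():
            continue  # ignore blank lines between items
        match = ITEM.match(line)
        if match is None:
            # Any non-list line (e.g. a long paragraph) fails the gate.
            raise ValueError(f"not a list item: {line!r}")
        items.append(match.group(1))
    if len(items) != 3:
        raise ValueError(f"expected 3 points, got {len(items)}")
    return items
```

Returning the parsed points, rather than just `True`, lets Step 2 receive a clean Python list instead of raw model text.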

Step 2: The Writer (LLM Call #2)

- Input: The list generated in Step 1.

- Prompt: "Write a developed paragraph with examples for each of the following points: [Paste the list from Step 1 here]"

- Output: The main body of the article, well-developed.

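Step 2 shows the hand-off: the validated list from Step 1 is pasted into the writer's prompt. A minimal sketch, assuming a hypothetical `llm` interface (prompt string in, reply string out) and that the points arrive as a Python list, for example after a gate like the one described above has parsed them; `write_body` is an illustrative name.

```python
from typing import Callable, List

LLM = Callable[[str], str]  # hypothetical: prompt in, reply text out


def write_body(llm: LLM, points: List[str]) -> str:
    """LLM call #2: the list from Step 1 is this step's input."""
    # Re-number the points so the writer sees a clean, predictable list.
    numbered = "\n".join(f"{i}. {point}" for i, point in enumerate(points, start=1))
    prompt = (
        "Write a developed paragraph with examples for each of the following points:\n"
        + numbered
    )
    return llm(prompt)
```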
Step 3: The Titler (LLM Call #3)

- Input: The complete text generated in Step 2.

- Prompt: "Based on the following article, generate 5 attractive and SEO-optimized titles."

- Output: A list of possible titles for the blog.

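Step 3 consumes the whole body from Step 2 and turns the reply into a list of titles. A minimal sketch, assuming a hypothetical `llm` interface (prompt string in, reply string out) and a reply with one title per line; `suggest_titles` is an illustrative name, and the check that exactly five titles came back is an extra gate that the original example does not include.

```python
import re
from typing import Callable, List

LLM = Callable[[str], str]  # hypothetical: prompt in, reply text out

# Strips a leading list marker such as "1. ", "2) ", "- " or "* " from a line,
# while leaving titles like "5 Ways to Relax" intact.
MARKER = re.compile(r"^\s*(?:[-*]|\d+[.)])\s+")


def suggest_titles(llm: LLM, article: str, expected: int = 5) -> List[str]:
    """LLM call #3: the complete text from Step 2 is this step's input."""
    prompt = (
        f"Based on the following article, generate {expected} attractive and "
        "SEO-optimized titles.\n\n" + article
    )
    reply = llm(prompt)
    titles = [MARKER.sub("", line).strip() for line in reply.splitlines() if line.strip()]
    # Extra gate (an assumption, not in the original): insist on the requested count.
    if len(titles) != expected:
        raise ValueError(f"expected {expected} titles, got {len(titles)}")
    return titles
```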
Final Result: Instead of a single "mega-prompt" that could fail in many ways, we build a chain in which each specialist does one narrow job and a gate checks the hand-off, which makes a high-quality final result far more likely.